This is part 1 of a 3-part blog series; read part 2 here.

The State of Play

Enterprises are pushing forward with AI, but too often they apply an ML mindset. The result is fragmented deployments, rising costs, and governance gaps. AI inference is not ML at scale; it requires a new operational model: InferenceOps.

In this blog, I’ll outline why the ML playbook once made sense, why it fails in the age of AI, how leading enterprises are adapting, and what a better operating model, InferenceOps, looks like.

Decentralized Inference: Why the Old ML Playbook Made Sense

The "ML playbook" refers to the common pattern where platform teams provided basic tooling and infrastructure for training and deployment, while individual use case teams used that tooling to train, deploy, run inference on, and manage their own models end-to-end. This playbook worked well for a few reasons:

- Bespoke and model-centric workflows: Each ML model was custom-trained for a specific use case, and the model itself was the product. Use case teams needed hands-on control to iterate and improve it, which meant there was little value in centralising models since no two teams needed the same one.

- Computationally inexpensive: ML models are relatively cheap to run, and their inference typically runs comfortably on CPUs. This made deployments inexpensive and straightforward for most use cases, so optimization was rarely worth the effort.

Given these characteristics of ML inference, it made sense for inference to be done by the use case teams. Decentralisation of inference was logical, and platform teams simply needed to provide the tools to do it.

Why That Playbook Breaks for AI

AI inference is fundamentally different from ML inference, and as a result the decentralised inference playbook breaks down. Why?

- General-purpose backbones: Foundation models are not highly specialized: a single Llama model can power dozens of use cases within an organization. Even when these models are fine-tuned with LoRA or other PEFT adapters, the bulk of the model (the backbone) is still reusable. Having each team spin up its own deployment wastes resources and creates redundancy.

- Expensive inference: AI models are multiple orders of magnitude more computationally expensive than ML models. They require expensive GPUs, so highly optimized deployments and high utilization matter; otherwise, redundant deployments can quickly send costs spiraling.

- API-centric workflows: Most use case teams don’t need deep access to models anymore. Their levers are prompting, orchestration, and integration, not retraining. Model APIs serve their needs; they don't need the model weights as well.

- User-facing and real-time: Because AI applications are more often highly visible and user-facing, poor latency, throughput, uptime, or guardrailing can be highly damaging. Non-standardized deployments risk inconsistently applied governance, reliability, and performance.
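To make the redundancy point concrete, here is a back-of-the-envelope sketch comparing per-team deployments with one shared deployment. The team names, request rates, per-replica capacity, and the rule that every deployment keeps at least one replica warm are all hypothetical assumptions for illustration, not benchmarks. Summing peak loads also assumes the worst case, where every team peaks at the same time.

```python
import math

# Hypothetical peak request rates (requests/second) for four use case teams.
# These are illustrative assumptions, not measurements.
TEAM_PEAK_RPS = {"search": 3.0, "support-bot": 5.0, "doc-summary": 1.5, "code-assist": 2.0}

# Assumed throughput of one GPU replica of the model, in requests/second.
REPLICA_CAPACITY_RPS = 4.0


def replicas_needed(rps: float, capacity: float) -> int:
    """Whole replicas needed to serve `rps`, keeping at least one replica warm."""
    return max(1, math.ceil(rps / capacity))


def dedicated_replicas(loads: dict, capacity: float) -> int:
    # Each team runs its own deployment, so capacity is rounded up per team.
    return sum(replicas_needed(rps, capacity) for rps in loads.values())


def shared_replicas(loads: dict, capacity: float) -> int:
    # One central deployment absorbs the combined (worst-case) load.
    return replicas_needed(sum(loads.values()), capacity)


if __name__ == "__main__":
    d = dedicated_replicas(TEAM_PEAK_RPS, REPLICA_CAPACITY_RPS)
    s = shared_replicas(TEAM_PEAK_RPS, REPLICA_CAPACITY_RPS)
    print(f"dedicated: {d} replicas, shared: {s} replicas")
```

With these assumed numbers, the four dedicated deployments need five replicas between them, while a single shared deployment needs three, because each team's load is rounded up to whole replicas separately. Actual savings depend on real traffic and on how much the teams' peaks overlap.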

Running AI Inference at Enterprise Scale: InferenceOps

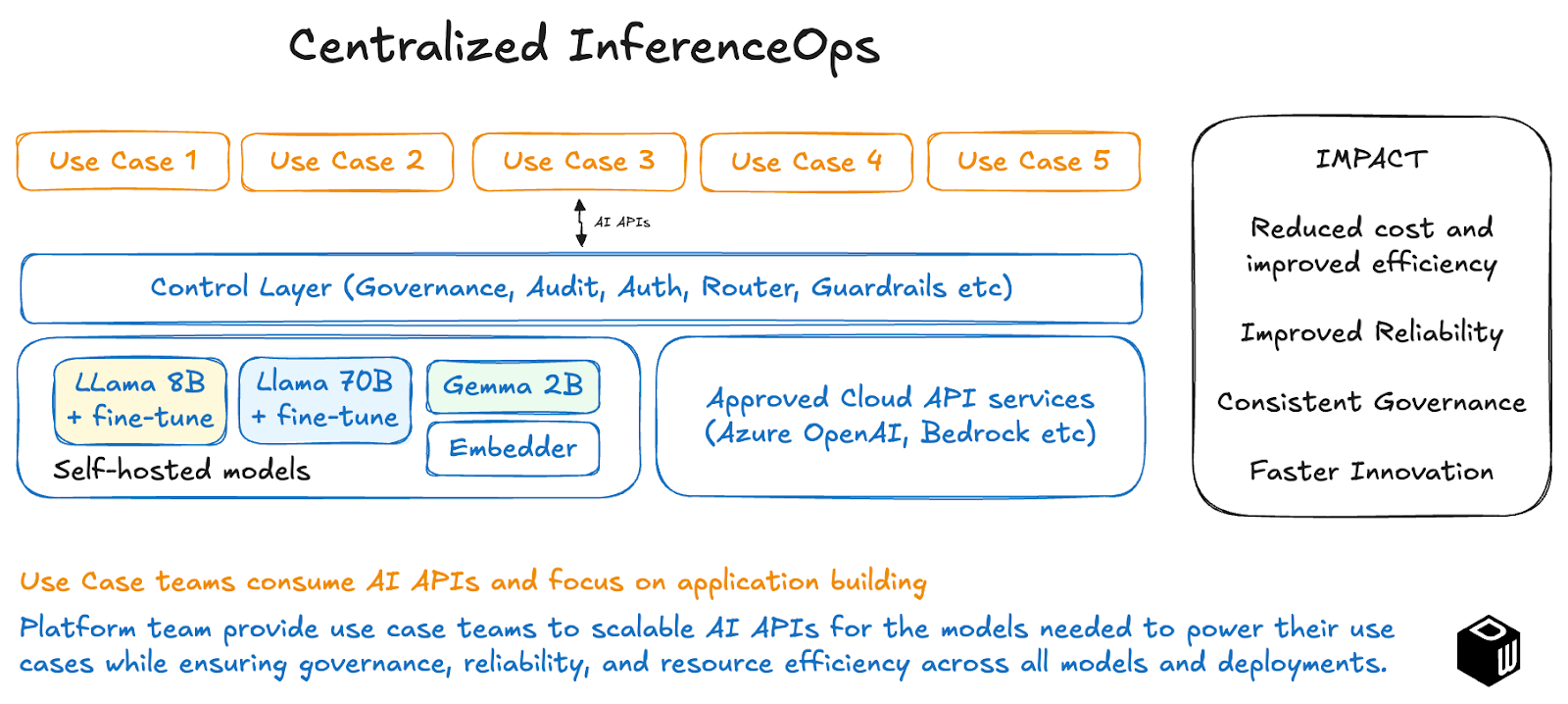

Enter InferenceOps: the new function every enterprise adopting self-hosted or multi-provider AI will need to establish within its platform team. InferenceOps is a central capability responsible for delivering scalable, reliable, and governed AI APIs to downstream use case teams.

This setup lets use case teams innovate freely, while the InferenceOps team ensures efficient GPU utilization, consistently high uptime, and organization-wide governance. It’s a model already proven by Tier-1 banks and leading tech companies, which have converged on centralized inference platforms that expose APIs to their development teams.
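As a rough illustration of what exposing a governed API to development teams can mean, the sketch below puts one gateway in front of a single shared backbone. Everything in it is hypothetical: the class names, the model and adapter identifiers, and the simple keyword guardrail stand in for whatever serving stack and policy engine an InferenceOps team actually uses. It only builds the request a serving backend would receive; it does not call any real inference API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class TeamPolicy:
    """Per-team settings owned centrally (hypothetical fields)."""
    adapter: Optional[str] = None  # optional LoRA adapter on the shared backbone
    max_tokens: int = 512          # per-team cap on output length


class InferenceGateway:
    """Sketch of a central API in front of one shared backbone model."""

    def __init__(self, backbone: str, policies: dict[str, TeamPolicy],
                 blocked_terms: set[str]):
        self.backbone = backbone
        self.policies = policies
        # One guardrail list applied to every team, maintained in one place.
        self.blocked_terms = {t.lower() for t in blocked_terms}

    def build_request(self, team: str, prompt: str, max_tokens: int = 256) -> dict:
        policy = self.policies.get(team)
        if policy is None:
            raise PermissionError(f"team {team!r} is not registered")
        # Naive case-insensitive keyword check, standing in for real guardrails.
        lowered = prompt.lower()
        if any(term in lowered for term in self.blocked_terms):
            raise ValueError("prompt rejected by guardrail")
        # Every team hits the same backbone; only the adapter and limits differ.
        return {
            "model": self.backbone,
            "adapter": policy.adapter,
            "prompt": prompt,
            "max_tokens": min(max_tokens, policy.max_tokens),
        }


if __name__ == "__main__":
    gateway = InferenceGateway(
        backbone="shared-llm",
        policies={
            "support-bot": TeamPolicy(adapter="support-lora", max_tokens=300),
            "search": TeamPolicy(),
        },
        blocked_terms={"password"},
    )
    print(gateway.build_request("support-bot", "Summarise this ticket", max_tokens=1000))
```

In this sketch the support team asks for 1,000 tokens but receives the centrally set cap of 300, and both teams share the same `shared-llm` backbone. The design choice being illustrated is that limits, adapters, and guardrails live in one place rather than being re-implemented in each team's deployment.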

The benefits to the enterprise of running AI inference this way are significant:

- Efficiency: Deploy each model once and share it across teams, eliminating redundant deployments and wasted GPU spend.

- Reliability: Deliver enterprise-grade latency, throughput, and uptime by allowing the inference center of excellence to deploy on highly optimized AI inference infrastructure.

- Governance: Apply consistent guardrails and compliance policies across the board.

- Speed of innovation: Free use case teams to focus on building value, not managing infrastructure.

In ML, inference was an afterthought. In AI, inference is the bottleneck. Enterprises that keep the decentralised, MLOps-inspired playbook will overspend and underdeliver. Those that invest in centralized InferenceOps now will build the backbone for sustainable, enterprise-wide AI adoption.

Read the next part of the blog series here, where I explain in more detail what this InferenceOps function will look like and what it will be responsible for.
