Inference at Scale

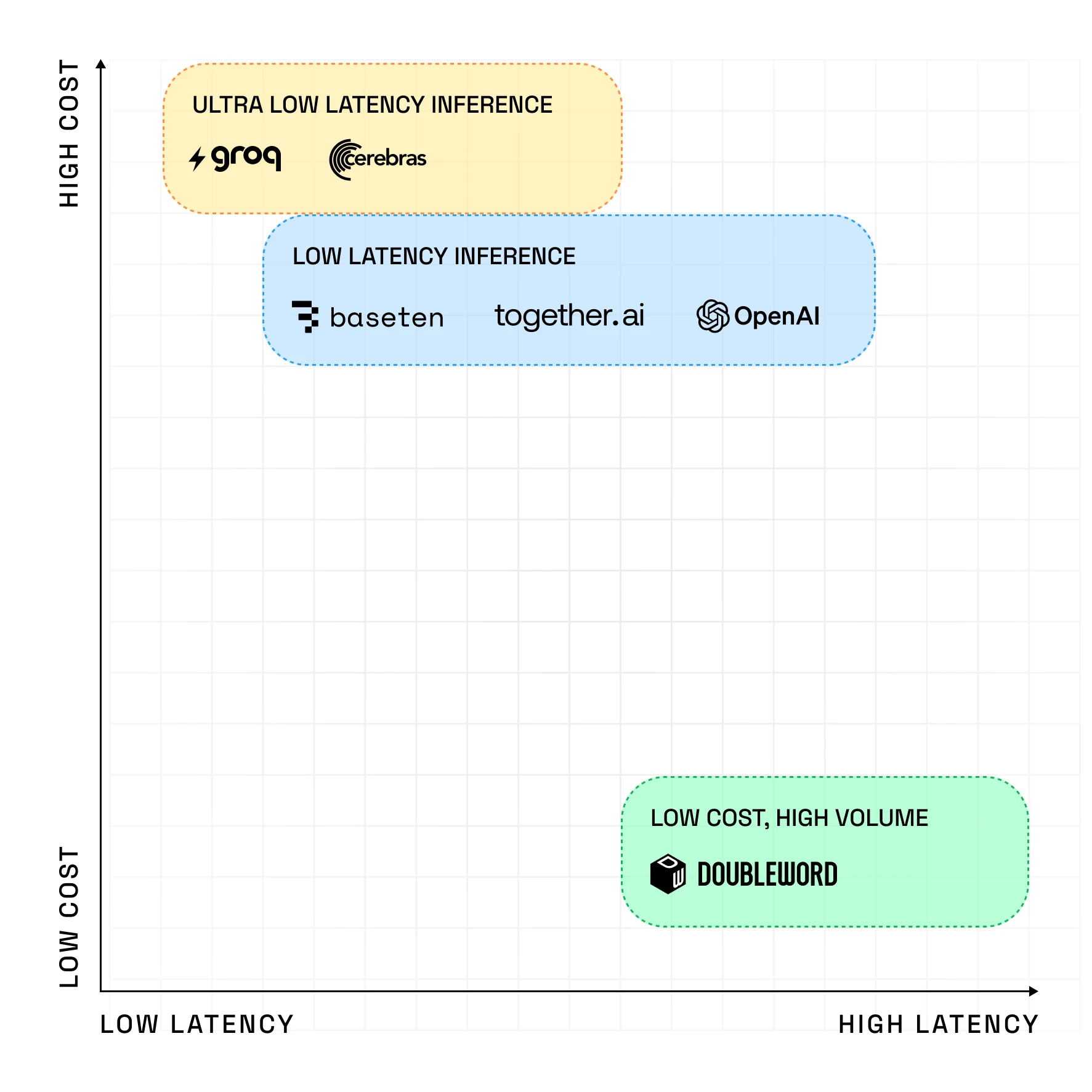

Run large-scale inference jobs for a fraction of the cost of other inference providers. Doubleword Batch is an inference stack built from the ground up for batch workloads, with hardware, runtime, and orchestration optimized exclusively for cost efficiency and reliability.

Manage Inference across your business

Stay in control of every model, deployment, and API - across teams, jurisdictions and clouds. The Doubleword control layer gives you centralised visibility and governance across all AI usage - both your private deployments and cloud APIs like OpenAI and Bedrock. With built-in auth, RBAC, intelligent logging, and usage metering, your teams stay compliant and secure.

Inference in your private environment

Run open-source and custom language models at scale, in your private cloud, on-prem, or hybrid infrastructure for your most sensitive use cases.

We pair state-of-the-art inference engines with our custom-built scaling layer, deployed as infrastructure-as-code, to create scalable OpenAI-compatible APIs within your environment.

Our customers focus on delivering value, not managing infrastructure

Stop overpaying for inference.

Doubleword Batch is now live.

Run batch and async workloads at significantly lower cost - with 1-hour and 24-hour SLAs.

Free credits available to get started.
