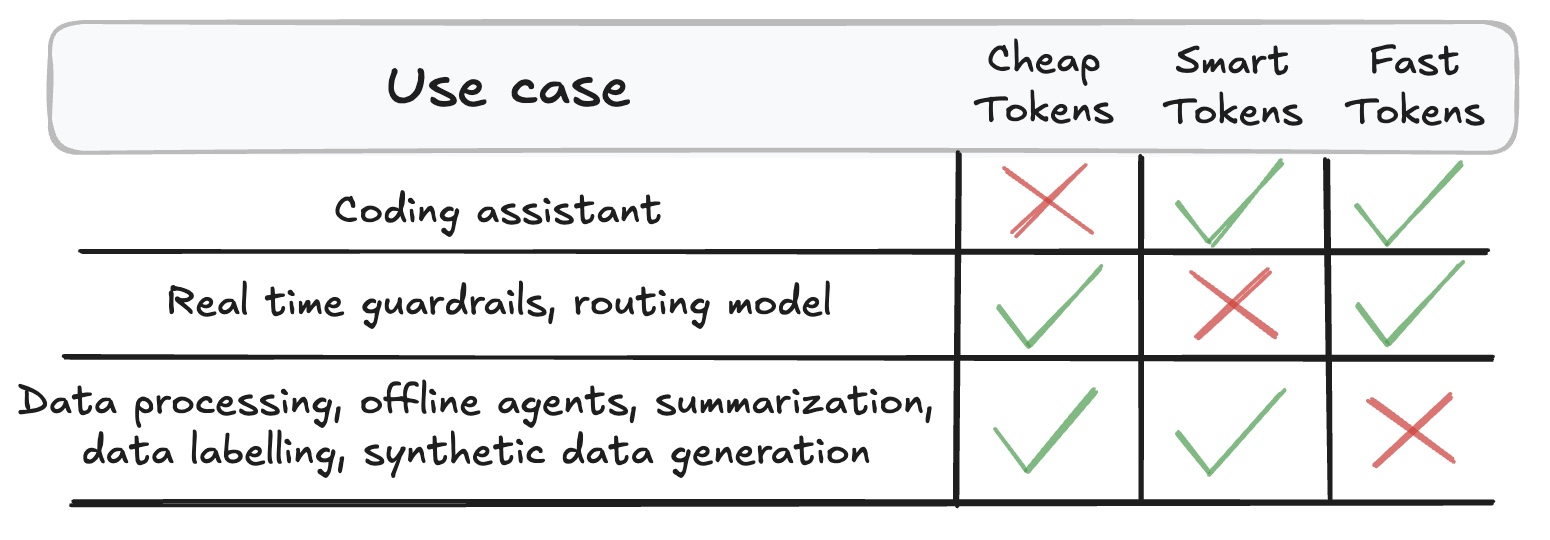

Inference is a game of trade-offs between latency, cost, and model performance, and normally you can only pick two of the three. You can have smart, fast, and expensive tokens; stupid, fast, and cheap tokens; or smart, slow, and cheap tokens.

There is no wrong trade-off, only the wrong trade-off for the job.

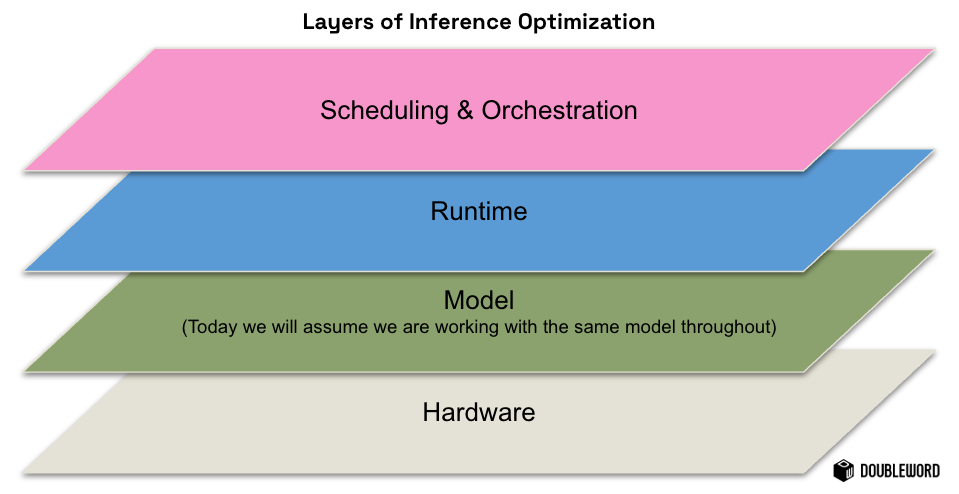

Each trade-off you choose calls for different optimizations at every layer of the stack. I tend to think of these choices at four levels:

- Hardware

- Model (Big or small model? Quantized? MoE? Evals?)

- Runtime (How does the model run on a single pod?)

- Scheduling and Orchestration (How do you orchestrate requests and scaling over multiple pods?)

The inference industry does not cater to high-volume use cases that demand low token prices

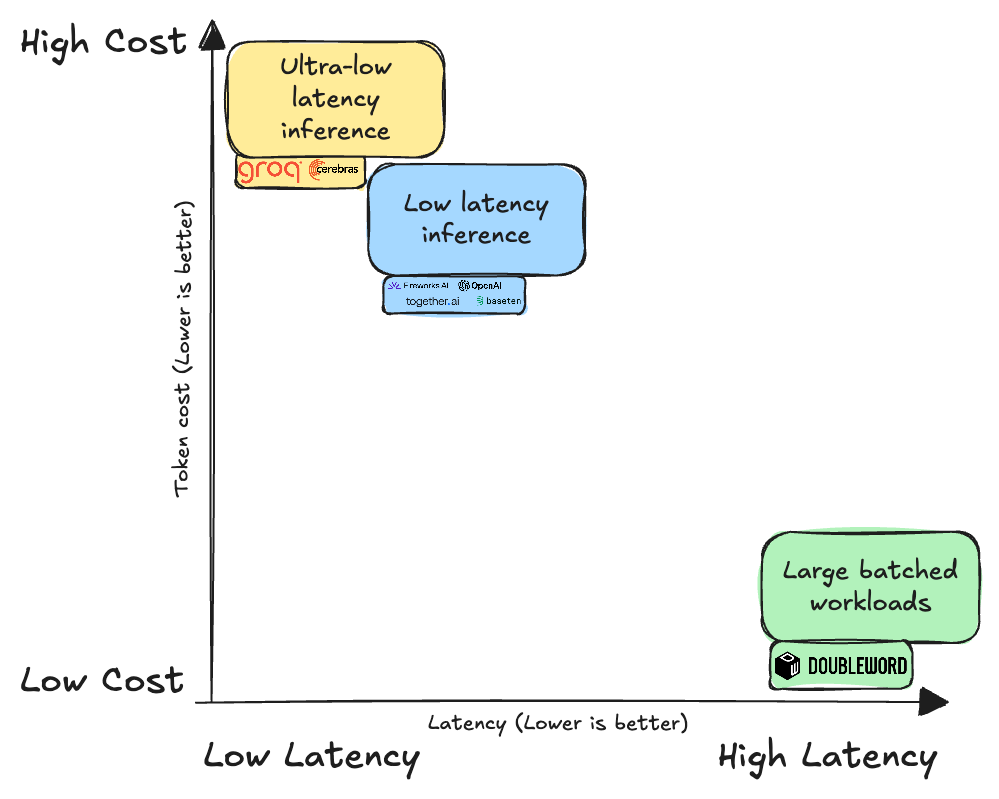

The AI inference ecosystem is currently optimized top-to-bottom for low latency. The most visible providers - from Groq and Cerebras to Fireworks and OpenAI - are competing to deliver the fastest tokens possible, often using specialized hardware like Cerebras's Wafer-Scale Engine (WSE), low batch sizes, or runtimes optimized for speed.

This is great for real-time use cases like code generation. However, it completely misses the mark for what we estimate will be the majority of long-term token demand: high-volume use cases where users are happy to wait a bit in exchange for dramatically lower costs.

The result is that users running critical, non-real-time workloads - like offline agents, data processing, and large-scale summarization - are facing an experience that was not built for them.

The Three Critical Issues

The industry's focus on low latency endpoints has created three major pain points for high-volume users:

- Tokens are too expensive: Existing providers offer token discounts (e.g., 50% off for a 24-hour SLA). But these "batched" options are often just a way to smooth demand on infrastructure designed for real-time use. If you designed an API to optimize for cost from the ground up, the discount would be far, far bigger than 50%.

- Batched DevEx is a second-class citizen: Because the providers' core business is real-time APIs, their batched endpoints are poorly maintained. Developers regularly experience failed or expired batches and a user experience that hasn't been optimized for their workflows.

- SLAs don’t reflect use case demands: Most providers only offer a rigid 24-hour SLA. If your use case requires a response in 1 hour or 4 hours (a non-real-time but time-sensitive need), you have no choice but to pay full price for a real-time API just to guarantee the response time.
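The SLA gap in the last point can be made concrete with a small sketch. It assumes a provider with only two tiers, real-time and a 24-hour batch at the 50% discount used as an example above. The `Tier` class, the tier names, and the relative prices are illustrative assumptions, not any provider's actual pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    sla_hours: float       # worst-case time to completion (real-time treated as ~0 h)
    relative_price: float  # price relative to the real-time tier (1.0)


def cheapest_tier(tiers, deadline_hours):
    """Return the cheapest tier whose SLA still meets the deadline."""
    eligible = [t for t in tiers if t.sla_hours <= deadline_hours]
    if not eligible:
        raise ValueError("no tier can meet this deadline")
    return min(eligible, key=lambda t: t.relative_price)


# Hypothetical two-tier menu: real-time, or 24-hour batch at 50% off.
TYPICAL_TIERS = [
    Tier("real-time", 0.0, 1.0),
    Tier("batch-24h", 24.0, 0.5),
]

# A 4-hour deadline rules out the 24-hour tier, so the job pays full price.
four_hour_choice = cheapest_tier(TYPICAL_TIERS, deadline_hours=4)
print(four_hour_choice.name, four_hour_choice.relative_price)

# Only jobs that can wait the full 24 hours get the discount.
one_day_choice = cheapest_tier(TYPICAL_TIERS, deadline_hours=24)
print(one_day_choice.name, one_day_choice.relative_price)
```

Under these assumptions, any deadline shorter than 24 hours falls back to the real-time price, which is the gap an intermediate SLA tier is meant to close.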

Doubleword Batched: The Costco of Inference

At Doubleword, we saw the problem: high-volume, cost-sensitive inference was being underserved. So, we built the inference experience for non-real-time workloads from the ground up.

Doubleword Batched is an inference service purpose-built for producing the cheapest tokens. We achieve this by applying deep inference optimization skills at every layer of the stack - Hardware, Runtime, and Orchestration - to maximize cost efficiency over latency.

Think of us as the Costco of inference. We pass the savings from our cost-optimized stack directly on to you, our customer:

- The Cheapest Tokens on the Market: We are currently 4x cheaper than real-time APIs and 2x cheaper than existing batched APIs. We are not running at a loss; we are offering these costs because we optimized our stack exclusively for cost/token.

- Adhered-to 1-Hour and 24-Hour SLAs: We offer SLAs that actually make sense for your non-real-time requirements. Unlike other providers, we commit to these guarantees, and if the SLA is not met, you get your money back.

- First-Class DevEx: We treat batched inference as a priority, ensuring an intuitive developer experience that allows you to get your responses back as they are processed.
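To see how the pricing multiples above relate to each other, here is a rough back-of-the-envelope sketch. The real-time price of $1.00 per million tokens and the 2-billion-token workload are made-up figures for illustration; only the ratios (50% off for existing batched APIs, 4x cheaper than real-time) come from the text.

```python
def workload_cost(tokens, price_per_million):
    """Cost in dollars of processing `tokens` at a per-million-token price."""
    return tokens / 1_000_000 * price_per_million


# Hypothetical baseline: $1.00 per million tokens for a real-time API.
REALTIME_PRICE = 1.00
# Existing batched APIs, using the 50%-off example from earlier in the post.
EXISTING_BATCH_PRICE = REALTIME_PRICE * 0.5
# "4x cheaper than real-time" expressed as a quarter of the baseline price.
DOUBLEWORD_PRICE = REALTIME_PRICE / 4

TOKENS = 2_000_000_000  # hypothetical 2-billion-token batch job

realtime_cost = workload_cost(TOKENS, REALTIME_PRICE)
existing_batch_cost = workload_cost(TOKENS, EXISTING_BATCH_PRICE)
doubleword_cost = workload_cost(TOKENS, DOUBLEWORD_PRICE)

print(f"real-time:       ${realtime_cost:,.2f}")
print(f"existing batch:  ${existing_batch_cost:,.2f}")
print(f"4x-cheaper tier: ${doubleword_cost:,.2f}")
```

With these assumed numbers, a quarter of the real-time price is also half of a 50%-discounted batch price, so the "4x" and "2x" figures describe the same price point from two baselines.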

Doubleword Batched is open in private preview

We’re excited to announce that Doubleword Batched is now open in private preview for teams who:

- Are using inference providers' batched APIs for their use cases

- Or are working on non-real-time use cases that can be satisfied by either a 1-hour or 24-hour SLA

Request access today here.

Footnotes

1 We know this well. In fact, our first technology in 2022 was a form of quantum-inspired fine-pruning that created thousands of variants of the original model, each at a different parameter size. This allowed our customers to ‘fill in the gaps’ between the available model sizes, giving them total choice over the accuracy/latency trade-off they were making. You can see a lecture that our co-founder and head of research gave on this here, and you can check out an example of this plot here.